Despite the potentially confusing name, logistic regression is a classification algorithm, not a regression algorithm.

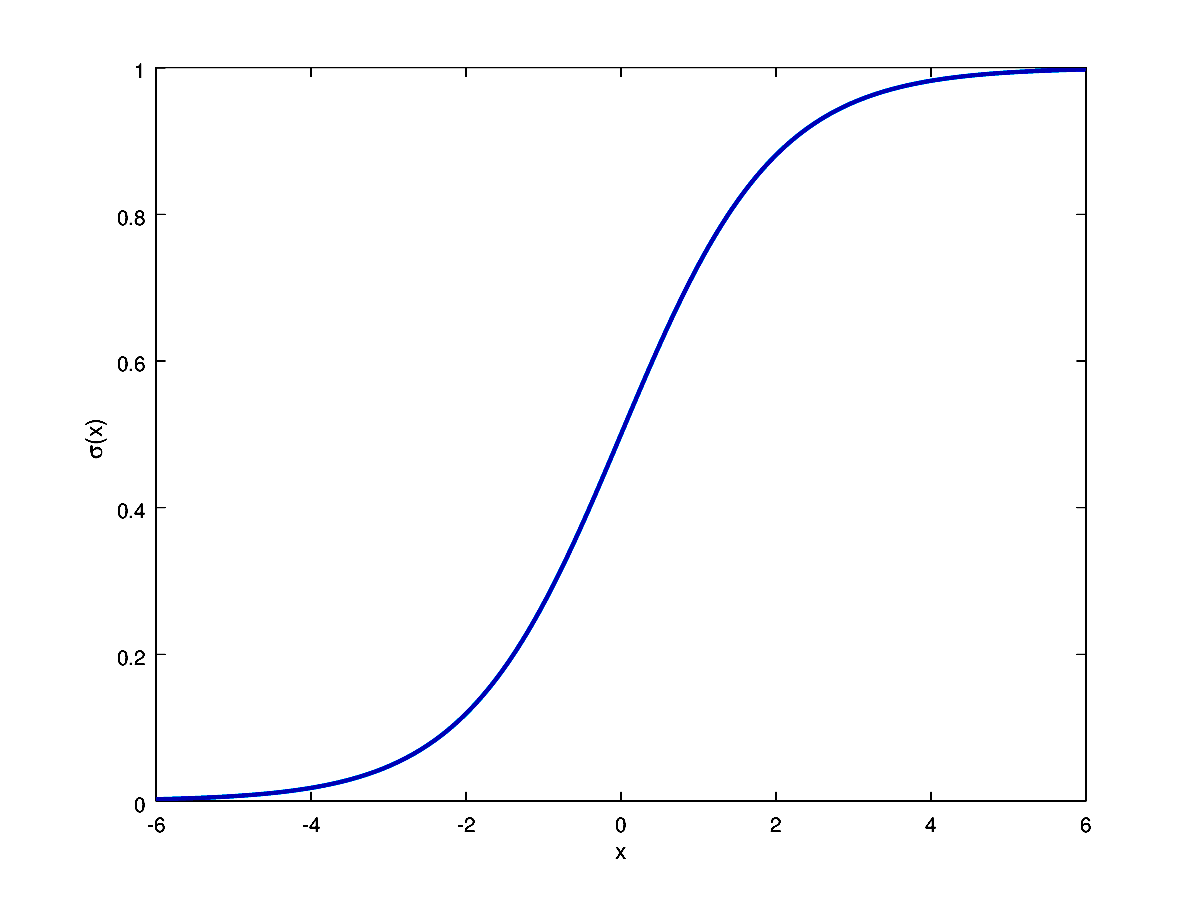

In Logistic Regression, we define a set of weights w that are combined (through a simple dot product) with some features ϕ. For a two-class classification problem, the posterior probability of class $C_1$ can be written as a logistic sigmoid function:

$$p(C_1|\phi) = \frac{1}{1 + e^{-w^T\phi}} = \sigma(w^T\phi)$$

and $p(C_2|\phi) = 1 - p(C_1|\phi)$.
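As a minimal sketch of the two posteriors above, the snippet below evaluates the sigmoid of the dot product in plain Python. The function names (`sigmoid`, `posterior_c1`) and the numeric values of `w` and `phi` are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(a):
    """Logistic sigmoid, written to avoid overflow for large |a|."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def posterior_c1(w, phi):
    """p(C1 | phi) = sigmoid(w^T phi) for weight and feature lists."""
    return sigmoid(sum(w_i * x_i for w_i, x_i in zip(w, phi)))

# Hypothetical weights and features, for illustration only.
w = [0.5, -1.0, 2.0]
phi = [1.0, 2.0, 0.5]

p1 = posterior_c1(w, phi)  # here w^T phi = 0.5 - 2.0 + 1.0 = -0.5
p2 = 1.0 - p1              # p(C2 | phi)
```

Since the dot product is negative in this example, `p1` falls below 0.5 and the sample would be assigned to class C2.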

Applying the Maximum Likelihood approach…

Given a dataset $D = \{(\phi_n, t_n)\ \forall n \in [1, N]\}$, $t_n \in \{0, 1\}$, we have to maximize the probability of getting the right label:

$$P(t|\Phi, w) = \prod_{n=1}^{N} y_n^{t_n}(1 - y_n)^{1 - t_n}, \quad y_n = \sigma(w^T\phi_n)$$

Taking the negative log of the likelihood gives the cross-entropy error function, which has to be minimized:

$$L(w) = -\ln P(t|\Phi, w) = -\sum_{n=1}^{N}\left(t_n \ln y_n + (1 - t_n)\ln(1 - y_n)\right) = \sum_{n=1}^{N} L_n$$
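The cross-entropy error can be computed directly from its definition. The following is a sketch under assumptions: the helper names, the toy data, and the `eps` clipping (a common guard against `log(0)`, not part of the formula itself) are all illustrative.

```python
import math

def sigmoid(a):
    """Logistic sigmoid, written to avoid overflow for large |a|."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def cross_entropy(w, Phi, t, eps=1e-12):
    """L(w) = -sum_n [t_n ln y_n + (1 - t_n) ln(1 - y_n)]."""
    total = 0.0
    for phi_n, t_n in zip(Phi, t):
        y_n = sigmoid(sum(w_i * x_i for w_i, x_i in zip(w, phi_n)))
        y_n = min(max(y_n, eps), 1.0 - eps)  # assumed guard against log(0)
        total -= t_n * math.log(y_n) + (1 - t_n) * math.log(1.0 - y_n)
    return total

# Hypothetical toy data: the first feature acts as a constant bias term.
Phi = [[1.0, 2.0], [1.0, -1.0], [1.0, 0.5]]
t = [1, 0, 1]

# With w = 0 every y_n equals 0.5, so the loss is N * ln 2.
loss_at_zero = cross_entropy([0.0, 0.0], Phi, t)
```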

Differentiating and using the chain rule:

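$$\frac{\partial L_n}{\partial y_n} = \frac{y_n - t_n}{y_n(1 - y_n)}, \quad \frac{\partial y_n}{\partial w} = y_n(1 - y_n)\phi_n$$

$$\frac{\partial L_n}{\partial w} = \frac{\partial L_n}{\partial y_n}\frac{\partial y_n}{\partial w} = (y_n - t_n)\phi_n$$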

The gradient of the loss function is

$$\nabla L(w) = \sum_{n=1}^{N}(y_n - t_n)\phi_n$$
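One way to sanity-check the gradient formula is to compare it with a central finite-difference approximation of the loss. The sketch below does this on hypothetical toy data; the function names and the step size `h` are assumptions made for the example.

```python
import math

def sigmoid(a):
    # Plain form; adequate for the moderate inputs used here.
    return 1.0 / (1.0 + math.exp(-a))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def loss(w, Phi, t):
    """Cross-entropy error summed over all samples."""
    total = 0.0
    for phi_n, t_n in zip(Phi, t):
        y_n = sigmoid(dot(w, phi_n))
        total -= t_n * math.log(y_n) + (1 - t_n) * math.log(1.0 - y_n)
    return total

def gradient(w, Phi, t):
    """Analytic gradient: sum_n (y_n - t_n) * phi_n."""
    grad = [0.0] * len(w)
    for phi_n, t_n in zip(Phi, t):
        y_n = sigmoid(dot(w, phi_n))
        for i, x in enumerate(phi_n):
            grad[i] += (y_n - t_n) * x
    return grad

# Hypothetical toy data and weights.
Phi = [[1.0, 0.5], [1.0, -1.5], [1.0, 2.0]]
t = [1, 0, 1]
w = [0.1, -0.2]

analytic = gradient(w, Phi, t)

# Central finite differences, one coordinate at a time.
h = 1e-6
numeric = []
for i in range(len(w)):
    w_plus, w_minus = w[:], w[:]
    w_plus[i] += h
    w_minus[i] -= h
    numeric.append((loss(w_plus, Phi, t) - loss(w_minus, Phi, t)) / (2 * h))
```

The two lists `analytic` and `numeric` should agree up to the finite-difference error.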

It has the same form as the gradient of the sum-of-squares error function for linear regression. In this case, however, $y_n$ is not a linear function of w, so there is no closed-form solution. The error function is convex, so any local minimum is also a global one, and it can be minimized with standard gradient-based optimization techniques. Because the gradient is a sum of per-sample terms, it is also easy to adapt to the online learning setting.
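To illustrate the online setting, the sketch below updates the weights one sample at a time using the per-sample gradient $(y_n - t_n)\phi_n$. The learning rate, the number of passes, the toy data, and the name `online_step` are assumptions for illustration, not tuned or prescribed values.

```python
import math

def sigmoid(a):
    """Logistic sigmoid, written to avoid overflow for large |a|."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def loss(w, Phi, t):
    """Cross-entropy error over the whole dataset."""
    total = 0.0
    for phi_n, t_n in zip(Phi, t):
        y_n = sigmoid(dot(w, phi_n))
        total -= t_n * math.log(y_n) + (1 - t_n) * math.log(1.0 - y_n)
    return total

def online_step(w, phi_n, t_n, lr):
    """One update from a single sample: w <- w - lr * (y_n - t_n) * phi_n."""
    y_n = sigmoid(dot(w, phi_n))
    return [w_i - lr * (y_n - t_n) * x_i for w_i, x_i in zip(w, phi_n)]

# Hypothetical, non-separable toy data; the first feature is a bias term.
Phi = [[1.0, -2.0], [1.0, -1.0], [1.0, -0.5],
       [1.0, 0.5], [1.0, 1.0], [1.0, 2.0]]
t = [0, 0, 1, 0, 1, 1]

w = [0.0, 0.0]
initial_loss = loss(w, Phi, t)
for epoch in range(200):                 # assumed number of passes
    for phi_n, t_n in zip(Phi, t):       # samples arrive one at a time
        w = online_step(w, phi_n, t_n, lr=0.1)
final_loss = loss(w, Phi, t)
```

Summing the same per-sample terms over the whole dataset before updating would give ordinary batch gradient descent instead.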

Talking about Multiclass Logistic Regression…

For the multiclass case, the posterior probabilities can be represented by a softmax transformation of linear functions of feature variables:

$$p(C_k|\phi) = y_k(\phi) = \frac{e^{w_k^T\phi}}{\sum_j e^{w_j^T\phi}}$$

ϕ(x) has been abbreviated with ϕ for simplicity.
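The softmax posterior can be sketched as follows. Subtracting the maximum score before exponentiating is a common numerical-stability trick that leaves the result unchanged; it is an addition to the formula as written, and the names `softmax`, `posteriors`, and the example weights are hypothetical.

```python
import math

def softmax(scores):
    """Softmax with the max subtracted first to avoid overflow."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def posteriors(W, phi):
    """p(C_k | phi) for every class k, where W is a list of K weight vectors."""
    scores = [sum(w_i * x_i for w_i, x_i in zip(w_k, phi)) for w_k in W]
    return softmax(scores)

# Hypothetical weights for K = 3 classes and a 2-dimensional feature vector.
W = [[0.2, 1.0], [0.0, -0.5], [-0.3, 0.1]]
phi = [1.0, 2.0]

y = posteriors(W, phi)          # one probability per class, summing to 1
predicted_class = y.index(max(y))
```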

Maximum Likelihood is used to directly determine the parameters:

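$$p(T|\Phi, w_1, \dots, w_K) = \prod_{n=1}^{N} \underbrace{\left(\prod_{k=1}^{K} p(C_k|\phi_n)^{t_{nk}}\right)}_{\text{Term for correct class}} = \prod_{n=1}^{N}\left(\prod_{k=1}^{K} y_{nk}^{t_{nk}}\right)$$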

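where $y_{nk} = p(C_k|\phi_n) = \frac{e^{w_k^T\phi_n}}{\sum_j e^{w_j^T\phi_n}}$ and $t_{nk}$ is 1 if sample n belongs to class $C_k$ and 0 otherwise (1-of-K coding).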

The cross-entropy function is:

$$L(w_1, \dots, w_K) = -\ln p(T|\Phi, w_1, \dots, w_K) = -\sum_{n=1}^{N}\left(\sum_{k=1}^{K} t_{nk}\ln y_{nk}\right)$$

Taking the gradient with respect to each weight vector $w_j$:

$$\nabla_{w_j} L(w_1, \dots, w_K) = \sum_{n=1}^{N}(y_{nj} - t_{nj})\phi_n$$
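The multiclass loss and its gradient can be sketched in the same style as the binary case. This is an illustrative sketch only: the targets are assumed to be one-hot rows, and the function names and toy data are hypothetical.

```python
import math

def softmax(scores):
    """Softmax with the max subtracted first to avoid overflow."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def loss(W, Phi, T):
    """Cross-entropy: -sum_n sum_k t_nk * ln y_nk."""
    total = 0.0
    for phi_n, t_n in zip(Phi, T):
        y_n = softmax([dot(w_k, phi_n) for w_k in W])
        total -= sum(t * math.log(y) for t, y in zip(t_n, y_n))
    return total

def gradient(W, Phi, T):
    """Row j holds sum_n (y_nj - t_nj) * phi_n."""
    grad = [[0.0] * len(W[0]) for _ in W]
    for phi_n, t_n in zip(Phi, T):
        y_n = softmax([dot(w_k, phi_n) for w_k in W])
        for j in range(len(W)):
            for i, x in enumerate(phi_n):
                grad[j][i] += (y_n[j] - t_n[j]) * x
    return grad

# Hypothetical toy data: 4 samples, 2 features, K = 3 classes (one-hot targets).
Phi = [[1.0, 0.2], [1.0, -1.0], [1.0, 1.5], [1.0, 0.7]]
T = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
W = [[0.1, 0.3], [-0.2, 0.0], [0.05, -0.4]]

G = gradient(W, Phi, T)  # one gradient row per class weight vector
```

Because both the softmax outputs and the one-hot targets sum to 1 over the classes, the rows of `G` sum to the zero vector, which makes a quick consistency check.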