When talking about binary classification, a hypothesis is a function that maps an input from the entire input space $\mathcal{X}$ to a result:

$$h: \mathcal{X} \to \{-1, +1\}$$

A dichotomy is a hypothesis restricted to the sample: instead of the whole input space, it maps only the $N$ sample points $x_1, \dots, x_N$ to a result:

$$h: \{x_1, x_2, \dots, x_N\} \to \{-1, +1\}$$

The number of dichotomies $|\mathcal{H}(x_1, x_2, \dots, x_N)|$ is at most $2^N$, where $N$ is the sample size, since each of the $N$ points can be labeled either $-1$ or $+1$.

For example, for a sample of size $N = 3$ there are at most $2^3 = 8$ possible dichotomies:

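| # | $x_1$ | $x_2$ | $x_3$ |
|---|-------|-------|-------|
| 1 | -1 | -1 | -1 |
| 2 | -1 | -1 | +1 |
| 3 | -1 | +1 | -1 |
| 4 | -1 | +1 | +1 |
| 5 | +1 | -1 | -1 |
| 6 | +1 | -1 | +1 |
| 7 | +1 | +1 | -1 |
| 8 | +1 | +1 | +1 |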
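The table above can be generated mechanically: every dichotomy is one element of the Cartesian product $\{-1, +1\}^N$. A minimal Python sketch (the name `all_labelings` is our own, not from any library):

```python
from itertools import product

def all_labelings(n):
    """Return every assignment of the labels -1/+1 to n points (2**n tuples)."""
    return list(product((-1, +1), repeat=n))

# Prints the 8 rows of the table in the same order (+1 is printed as 1).
for i, labels in enumerate(all_labelings(3), start=1):
    print(i, *labels)
```

`itertools.product` varies the last position fastest, which is why the rows come out in the same order as the table.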

The growth function counts the maximum number of dichotomies that $\mathcal{H}$ can produce on any $N$ points:

$$m_{\mathcal{H}}(N) = \max_{x_1, \dots, x_N \in \mathcal{X}} \left|\mathcal{H}(x_1, \dots, x_N)\right|$$

The growth function satisfies:

$$m_{\mathcal{H}}(N) \le 2^N$$
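To see that the bound $m_{\mathcal{H}}(N) \le 2^N$ can be strict, here is a sketch for a simple hypothesis set chosen only for illustration: 1D positive rays, $h_a(x) = +1$ if $x > a$ and $-1$ otherwise. Only the position of the threshold $a$ relative to the points matters, so a finite list of thresholds covers every dichotomy. Because the points lie on a line, any $N$ distinct points give the same count, so counting on one sample gives $m_{\mathcal{H}}(N)$. The function names are hypothetical.

```python
def positive_ray_dichotomies(xs):
    """Distinct dichotomies produced on the (distinct) points xs by
    positive rays h_a(x) = +1 if x > a else -1."""
    pts = sorted(xs)
    # Threshold below all points, between each pair of neighbours, above all points.
    thresholds = [pts[0] - 1.0]
    thresholds += [(lo + hi) / 2 for lo, hi in zip(pts, pts[1:])]
    thresholds.append(pts[-1] + 1.0)
    return {tuple(+1 if x > a else -1 for x in xs) for a in thresholds}

def growth_function(n):
    """m_H(n) for positive rays, for n >= 1."""
    return len(positive_ray_dichotomies(list(range(n))))

for n in range(1, 6):
    print(n, growth_function(n), 2 ** n)
```

Positive rays give $m_{\mathcal{H}}(N) = N + 1$, which equals $2^N$ only for $N = 1$ and falls far below it afterwards.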

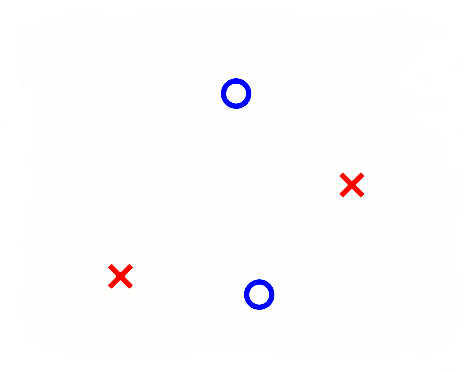

This is where the perceptron (a linear classifier in 2D) breaks down: no line can separate the configuration shown above. Of the $2^4 = 16$ possible dichotomies of four points, two cannot be represented: this one, and the one in which the left/right points are blue and the top/bottom points are red. Hence $m_{\mathcal{H}}(4) = 14$, and for perceptrons we cannot expect $m_{\mathcal{H}}(4)$ to reach $2^4 = 16$.
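The count of 14 can be checked numerically for the four-point configuration described (left, right, top and bottom points). The sketch below assumes the coordinates $(\pm 1, 0)$ and $(0, \pm 1)$ and tests each of the 16 labelings with the perceptron learning algorithm (PLA). An epoch with no mistakes proves the labeling is linearly separable; hitting `max_epochs` is only evidence of non-separability, although for these points the perceptron convergence theorem bounds the updates needed for any separable labeling to about ten, well under the cap. This counts dichotomies for one configuration only; it does not check that no other four points do better.

```python
from itertools import product

# Assumed coordinates for the four points, in the order: right, left, top, bottom.
POINTS = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]

def pla_separates(points, labels, max_epochs=100):
    """Perceptron learning algorithm with a bias term.
    Returns True once a full epoch passes without a mistake."""
    w1 = w2 = b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), y in zip(points, labels):
            if y * (w1 * x1 + w2 * x2 + b) <= 0:  # misclassified or on the line
                w1, w2, b = w1 + y * x1, w2 + y * x2, b + y
                mistakes += 1
        if mistakes == 0:  # weights unchanged all epoch, so every point is correct
            return True
    return False

all_labels = list(product((-1, 1), repeat=4))
realizable = [labels for labels in all_labels if pla_separates(POINTS, labels)]
print(len(realizable))  # 14
print(sorted(set(all_labels) - set(realizable)))  # [(-1, -1, 1, 1), (1, 1, -1, -1)]
```

The two missing labelings are exactly the "opposite pairs share a colour" cases from the text.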

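The VC (Vapnik-Chervonenkis) dimension of a hypothesis set $\mathcal{H}$, denoted $d_{VC}(\mathcal{H})$, is the largest value of $N$ for which $m_{\mathcal{H}}(N) = 2^N$. In other words, it is “the most points $\mathcal{H}$ can shatter”, where $\mathcal{H}$ shatters a set of $N$ points if it can produce all $2^N$ dichotomies on them.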
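The definition suggests a direct computation: evaluate $m_{\mathcal{H}}(N)$ for increasing $N$ and stop at the first $N$ where it falls below $2^N$. Stopping early is valid because if $\mathcal{H}$ cannot shatter any $N$ points, it cannot shatter any $N + 1$ points either (every subset of a shattered set is itself shattered). The sketch uses 1D intervals, $h(x) = +1$ if $a \le x \le b$ and $-1$ otherwise, as a hypothetical example class; `interval_dichotomies`, `vc_dimension` and the search cap `max_n` are illustrative names and choices.

```python
def interval_dichotomies(n):
    """Dichotomies that intervals h(x) = +1 if a <= x <= b else -1 produce
    on n distinct points on a line (labels listed in sorted point order)."""
    labelings = {tuple([-1] * n)}  # an interval that contains none of the points
    for i in range(n):
        for j in range(i, n):
            # An interval covering exactly the sorted points i..j.
            labelings.add(tuple(+1 if i <= k <= j else -1 for k in range(n)))
    return labelings

def vc_dimension(growth, max_n=20):
    """Largest n <= max_n with growth(n) == 2**n (0 if there is none)."""
    d = 0
    for n in range(1, max_n + 1):
        if growth(n) == 2 ** n:
            d = n
        else:
            break  # once shattering fails it fails for every larger n
    return d

print(vc_dimension(lambda n: len(interval_dichotomies(n))))  # 2
```

Intervals shatter any two points but only produce 7 of the 8 dichotomies on three points (the pattern $+1, -1, +1$ is impossible), so $d_{VC} = 2$ for this class.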

The VC dimension is one of many measures that characterize the expressive power, or capacity, of a hypothesis class.

You can think of the VC dimension as “how many points can this model class memorize/shatter?” (a ton? → BAD for generalization! not so many? → GOOD!).

With respect to learning, the effect of the VC dimension is that if it is finite, then the final hypothesis $g \in \mathcal{H}$ will generalize, given enough training examples:

$$d_{VC}(\mathcal{H}) < \infty \implies g \in \mathcal{H} \text{ will generalize}$$

The key observation here is that this statement is independent of:

- The learning algorithm

- The input distribution

- The target function

The only things that factor into this are the training examples, the hypothesis set, and the final hypothesis.

The VC dimension of a linear classifier (perceptron) in $d$ dimensions (i.e. a line in 2D, a plane in 3D, etc.) is $d + 1$: a line can shatter at most $2 + 1 = 3$ points, a plane at most $3 + 1 = 4$ points, and so on.
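The lower half of the $d + 1$ claim (some $d + 1$ points can be shattered) can be checked numerically. The sketch assumes the standard construction of the origin plus the $d$ unit basis vectors of $\mathbb{R}^d$ and runs the perceptron learning algorithm (PLA) on every labeling; an epoch with no mistakes proves that labeling is linearly separable. It says nothing about the upper half (that no $d + 2$ points can be shattered), which needs the proof. All function names and `max_epochs` are illustrative choices.

```python
from itertools import product

def perceptron_separates(points, labels, max_epochs=1000):
    """PLA with a bias term: True if some epoch makes no mistakes."""
    d = len(points[0])
    w = [0.0] * (d + 1)  # last entry is the bias weight
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            z = list(x) + [1.0]  # append a constant 1 so the bias is learned too
            if y * sum(wi * zi for wi, zi in zip(w, z)) <= 0:
                w = [wi + y * zi for wi, zi in zip(w, z)]
                mistakes += 1
        if mistakes == 0:
            return True
    return False

def shattering_points(d):
    """The origin plus the d standard basis vectors of R^d (d + 1 points)."""
    origin = tuple([0.0] * d)
    basis = [tuple(1.0 if i == j else 0.0 for j in range(d)) for i in range(d)]
    return [origin] + basis

def shatters(points):
    """True if every -1/+1 labeling of the points is linearly separable."""
    return all(perceptron_separates(points, labels)
               for labels in product((-1, 1), repeat=len(points)))

for d in (1, 2, 3):
    print(d, shatters(shattering_points(d)))
```

For $d = 2$ this is three non-collinear points, matching "a line can shatter 3 points".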

Proof: here

How many randomly drawn examples suffice to guarantee an error of at most $\epsilon$ with probability at least $1 - \delta$?

$$N \ge \frac{1}{\epsilon}\left(4\log\frac{2}{\delta} + 8\,VC(\mathcal{H})\log_2\frac{13}{\epsilon}\right)$$
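A small calculator makes the sample-size bound concrete. The base of the first logarithm is not shown in the formula; the sketch assumes base 2 for both logarithms (an assumption, flagged in the code too). The function name and the example values $\epsilon = 0.1$, $\delta = 0.05$, $VC(\mathcal{H}) = 3$ are illustrative.

```python
import math

def vc_sample_complexity(epsilon, delta, vc):
    """Smallest integer N satisfying the VC sample-size bound.
    Assumption: both logarithms are base 2."""
    if not (0 < epsilon < 1 and 0 < delta < 1) or vc < 0:
        raise ValueError("need 0 < epsilon < 1, 0 < delta < 1 and vc >= 0")
    bound = (4 * math.log2(2 / delta) + 8 * vc * math.log2(13 / epsilon)) / epsilon
    return math.ceil(bound)

# e.g. a linear classifier in 2D (VC = 3), 10% error, 95% confidence
print(vc_sample_complexity(0.1, 0.05, 3))
```

The VC term dominates: the required $N$ grows linearly in $VC(\mathcal{H})$ and roughly like $\frac{1}{\epsilon}\log\frac{1}{\epsilon}$ in the target error, while $\delta$ enters only logarithmically.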

PAC bound using the VC dimension: with probability at least $1 - \delta$, for every $h \in \mathcal{H}$,

$$L_{true}(h) \le L_{train}(h) + \sqrt{\frac{VC(\mathcal{H})\left(\ln\frac{2N}{VC(\mathcal{H})} + 1\right) + \ln\frac{4}{\delta}}{N}}$$
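The square-root term is the most by which $L_{true}(h)$ can exceed $L_{train}(h)$ under this bound. A sketch that evaluates it (the function name and the sample values $VC(\mathcal{H}) = 3$, $\delta = 0.05$ are arbitrary illustrative choices):

```python
import math

def vc_generalization_gap(n, vc, delta):
    """Square-root term of the VC bound: max excess of L_true over L_train."""
    if n <= 0 or vc <= 0 or not 0 < delta < 1:
        raise ValueError("need n > 0, vc > 0 and 0 < delta < 1")
    return math.sqrt((vc * (math.log(2 * n / vc) + 1) + math.log(4 / delta)) / n)

for n in (100, 1_000, 10_000, 100_000):
    print(n, round(vc_generalization_gap(n, vc=3, delta=0.05), 4))
```

The gap shrinks roughly like $\sqrt{VC(\mathcal{H}) \ln N / N}$: more data tightens it, and a richer hypothesis set (larger VC dimension) loosens it.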